First Steps for Understanding Azure AKS

Azure Learning Path for Cloud and DevOps Engineers

📝Introduction

This post walks through a simple explanation to help you start understanding Azure Kubernetes Service (AKS).

📝What is a container?

A container is a lightweight, standalone, executable package of software that includes everything needed to run a piece of software, including the code, runtime, system tools, libraries, and settings.

Containers isolate the software from its environment and ensure it works uniformly despite differences, for instance, between development and staging environments.

The most common technologies and tools related to containers include:

Docker: One of the most popular containerization platforms. Docker provides tools to create, deploy, and manage containers.

Kubernetes: An open-source platform designed to automate the deployment, scaling, and operation of containerized applications.

containerd and CRI-O: Container runtimes that provide the core functionality for managing the container lifecycle and operations, often used as components of larger systems such as Kubernetes.

📝Why use a container?

Containers offer a robust, efficient, and flexible approach to developing, deploying, and managing applications, making them a preferred choice for modern software development practices.

Key reasons to use containers:

Isolation: Containers provide a way to isolate applications and their dependencies from the host system and other containers. This isolation helps in preventing conflicts between different applications or different versions of the same application.

Lightweight: Containers share the host system's OS kernel, making them more lightweight compared to virtual machines (VMs), which include a full guest operating system. This efficiency allows for faster startup times and reduced overhead.

Portability: Containers encapsulate all necessary components to run the application, making them portable across different environments. This means you can develop an application on your local machine and then run it in the same way on a cloud server or another developer's machine.

Consistency: By packaging the application with its dependencies, containers ensure that the application will run the same way, regardless of where it is deployed. This helps in avoiding the "it works on my machine" problem.

Microservices: Containers are often used to implement microservices architecture, where an application is broken down into smaller, loosely coupled services. Each service can be developed, deployed, and scaled independently.

📝Differences between VMs and Containers

VMs and containers are both technologies used to run applications in isolated environments, but they differ significantly in terms of architecture, resource efficiency, and use cases.

VMs carry maintenance overhead that grows as you scale: the operating system (OS) version and dependencies on each VM must be provisioned and configured to match each application.

Upgrades that affect the OS or introduce major changes require extra precautions. If errors appear during the upgrade, the installation must be rolled back, which causes disruption such as downtime or delays.

Containers, by contrast, offer several major benefits compared to VMs:

Immutability - The unchanging nature of a container allows it to be deployed and run reliably with the same behavior from one compute environment to another. A container image tested in a QA environment is the same container image deployed to production.

Smaller Size - A container is similar to a VM, but without its own kernel; containers share the host kernel instead. VMs use a large image file to store both the OS and the application you want to run. In contrast, a container image doesn't need a full OS, only the application and its dependencies.

Lightweight - Containers rely on the host OS for kernel-specific services. This makes them less resource-intensive, so you can run multiple containers within the same compute environment.

Startup is fast - Containers typically start in a few seconds or less, unlike VMs, which can take minutes to boot.

Understanding these differences helps in choosing the right technology based on the specific needs of the application, performance requirements, and infrastructure constraints.

📝What is container management?

Container management is the process of organizing, deploying, and maintaining containers, which are lightweight, portable, and self-sufficient environments that include an application and its dependencies.

Container management tools and platforms are crucial for modern cloud-native applications, providing the necessary infrastructure to support microservices architectures and DevOps practices.

Benefits of Container Management:

Scalability: Easily scale applications up or down based on demand.

Portability: Containers can run on any system that supports the container runtime, ensuring consistency across different environments.

Efficiency: Containers share the host system’s kernel, which makes them more lightweight than virtual machines, leading to better resource utilization.

Isolation: Containers provide process and namespace isolation, which enhances security and reduces the risk of conflicts between applications.
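
To make "scale up or down based on demand" concrete: in Kubernetes, demand-based scaling of pods is commonly handled by the Horizontal Pod Autoscaler, which computes desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric). The Python sketch below reproduces only that formula plus the autoscaler's default 10% tolerance; the function name is hypothetical, and it deliberately ignores the minimum/maximum replica bounds and stabilization windows that the real autoscaler also applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Sketch of the Horizontal Pod Autoscaler rule:
    desired = ceil(current_replicas * current_metric / target_metric).
    Ignores min/max replica bounds and stabilization windows."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # within tolerance of the target: no change
    return math.ceil(current_replicas * ratio)


# Average CPU utilization (%) of the pods vs. a 60% target
print(desired_replicas(3, 90, 60))  # -> 5 (scale out)
print(desired_replicas(4, 20, 60))  # -> 2 (scale in)
print(desired_replicas(3, 62, 60))  # -> 3 (within tolerance)
```

The tolerance band keeps small metric fluctuations from causing constant scale-out/scale-in churn.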

📝What is Kubernetes?

Kubernetes is a portable, extensible open-source platform for automating deployment, scaling, and the management of containerized workloads.

Kubernetes is sometimes abbreviated to K8s.

Kubernetes allows us to view our data center as one large computer. We don't need to worry about how and where our containers are deployed, only about deploying and scaling our applications as needed.

Here are some additional aspects to keep in mind about Kubernetes:

Kubernetes isn't a full PaaS offering. It operates at the container level and offers only a common set of PaaS features.

Kubernetes isn't monolithic. It's not a single application that is installed. Aspects such as deployment, scaling, load balancing, logging, and monitoring are all optional.

Kubernetes doesn't limit the types of applications you can run. If your application can run in a container, it can run on Kubernetes.

Your developers need to understand concepts such as microservices architecture to make optimal use of container solutions.

Kubernetes doesn't provide middleware, data-processing frameworks, databases, caches, or cluster storage systems. All these items are run as containers or as part of another service offering.

A Kubernetes deployment is configured as a cluster. A cluster consists of at least one primary machine (the control plane) and one or more worker machines, which are also called nodes or agent nodes. For production deployments, the preferred configuration is a high-availability deployment with three to five replicated control plane machines.

This orchestration platform gives us the ease of use and flexibility of Platform as a Service (PaaS) and Infrastructure as a Service (IaaS) offerings.
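
Why three to five control plane machines, and why odd numbers? A common high-availability setup runs an etcd member on each control plane machine, and etcd only accepts writes while a majority (quorum) of its members is available. The short Python sketch below, assuming that stacked topology, does the arithmetic; the function names are hypothetical.

```python
def quorum(members: int) -> int:
    """Majority of members a Raft-based store such as etcd needs to accept writes."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """Members that can fail while a majority is still available."""
    return members - quorum(members)


for n in range(1, 6):
    print(f"{n} member(s): quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
# 3 -> quorum 2, tolerates 1; 4 -> quorum 3, tolerates 1; 5 -> quorum 3, tolerates 2
```

Going from three to four members raises the quorum without tolerating any extra failure, which is why odd sizes such as three or five are preferred.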

📝Kubernetes Architecture in Detail

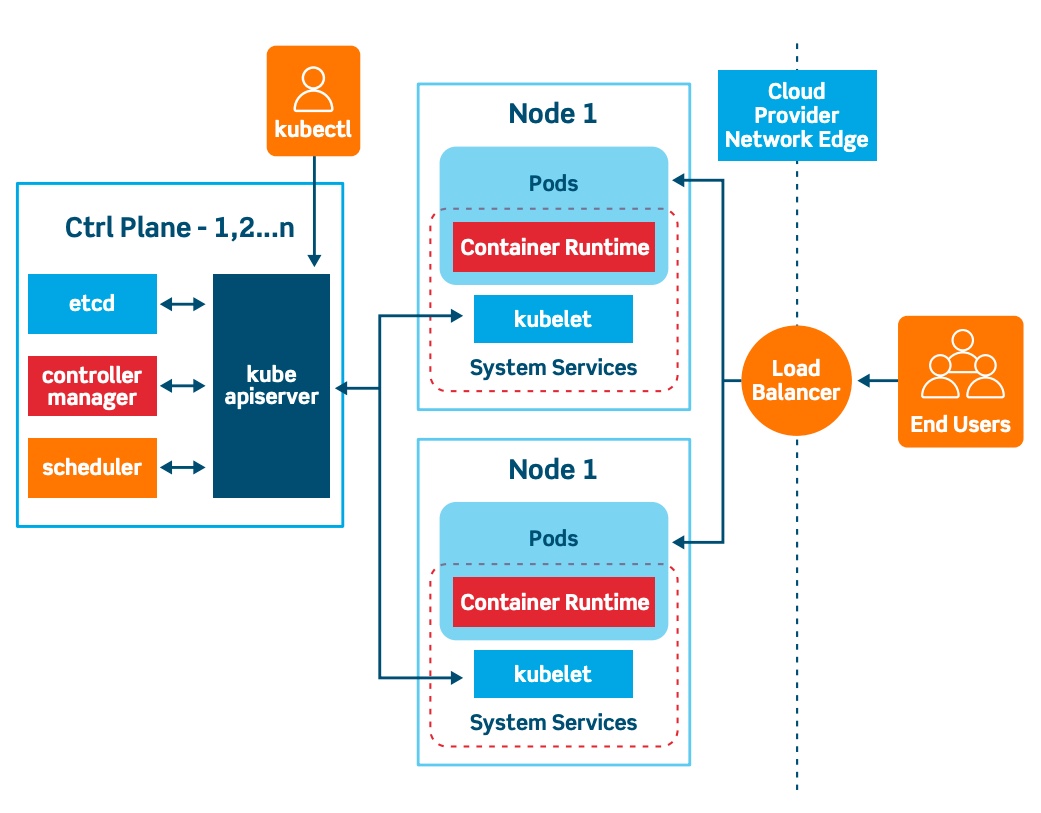

A Kubernetes cluster is composed of two separate planes:

Kubernetes control plane (master nodes) - Manages Kubernetes clusters and the workloads running on them. It includes components such as the API Server, Scheduler, and Controller Manager.

Kubernetes data plane (worker nodes) - Machines that can run containerized workloads. Each node is managed by the kubelet, an agent that receives commands from the control plane.

In addition, Kubernetes environments have the following key components:

Pods - The smallest unit Kubernetes provides for managing containerized workloads. A pod usually holds a single container, but it can include several tightly coupled containers that together form a functional unit or microservice.

Persistent storage - Local storage used by a pod, such as its containers' filesystems, is ephemeral and is deleted when the pod shuts down. This can make it difficult to run stateful applications. Kubernetes provides the Persistent Volumes (PV) mechanism, which allows containerized applications to store data beyond the lifetime of a pod or node.
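
As a hedged illustration of the Persistent Volumes mechanism, the Python sketch below builds a minimal PersistentVolumeClaim and a pod that mounts it, printed as JSON (kubectl accepts JSON as well as YAML). The names data-claim and web, the nginx image, the mount path, and the 5Gi size are placeholders; leaving out storageClassName means the cluster's default StorageClass is typically used.

```python
import json


def pvc_manifest(name: str, size: str = "5Gi") -> dict:
    """Minimal PersistentVolumeClaim; with no storageClassName, the default StorageClass is typically used."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],  # mountable read-write by a single node
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_with_volume(pod_name: str, image: str, claim_name: str, mount_path: str) -> dict:
    """Pod that mounts the claim, so files under mount_path outlive the pod itself."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                "volumeMounts": [{"name": "data", "mountPath": mount_path}],
            }],
            "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": claim_name}}],
        },
    }


# Placeholder names; kubectl accepts a v1 List wrapping several objects.
bundle = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        pvc_manifest("data-claim"),
        pod_with_volume("web", "nginx:1.25", "data-claim", "/usr/share/nginx/html"),
    ],
}
print(json.dumps(bundle, indent=2))
```

You could save the output to a file and apply it with kubectl apply -f. Because the data lives in the claimed volume rather than in the container's filesystem, deleting and recreating the pod leaves it intact.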

The core components of the Kubernetes control plane (master nodes) are:

API Server - Provides an API that serves as the front end of the Kubernetes control plane. It is responsible for handling external and internal requests, determining whether each request is valid and then processing it. The API can be accessed through the kubectl command-line interface, other tools such as kubeadm, and REST calls.

Scheduler - Assigns pods to specific nodes according to automated workflows and user-defined conditions, which can include resource requests, affinity rules, taints and tolerations, priority, persistent volumes (PV), and more.

Kubernetes Controller Manager - Runs the control loops that monitor and regulate the state of a Kubernetes cluster. It receives information about the current state of the cluster and the objects within it, and sends instructions to move the cluster toward the cluster operator's desired state. The controller manager is also responsible for several controllers that handle automated activities at the cluster or pod level, including the replication controller, namespace controller, service accounts controller, and the Deployment, StatefulSet, and DaemonSet controllers.
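
The scheduler's decision can be pictured as two phases: filter out nodes that cannot run the pod, then score the remaining ones. The Python sketch below is a heavily simplified model of that idea, not the real kube-scheduler: it only checks CPU/memory requests and exact-match taint strings, and it scores nodes by the CPU left over after placement. All node names, taint strings, and numbers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu_m: int    # unreserved CPU, in millicores
    free_mem_mi: int   # unreserved memory, in MiB
    taints: set = field(default_factory=set)  # e.g. {"sku=gpu:NoSchedule"}


@dataclass
class PodRequest:
    cpu_m: int
    mem_mi: int
    tolerations: set = field(default_factory=set)


def feasible(node: Node, pod: PodRequest) -> bool:
    """Filter phase: enough free resources and every node taint is tolerated."""
    return (node.free_cpu_m >= pod.cpu_m
            and node.free_mem_mi >= pod.mem_mi
            and node.taints <= pod.tolerations)


def score(node: Node, pod: PodRequest) -> int:
    """Score phase (simplified): prefer the node with the most CPU left after placement."""
    return node.free_cpu_m - pod.cpu_m


def schedule(pod: PodRequest, nodes: list):
    candidates = [n for n in nodes if feasible(n, pod)]
    if not candidates:
        return None  # no node fits: the pod would stay Pending
    return max(candidates, key=lambda n: score(n, pod)).name


nodes = [
    Node("node-a", 500, 1024),
    Node("node-b", 2000, 4096),
    Node("gpu-node", 4000, 8192, {"sku=gpu:NoSchedule"}),
]
print(schedule(PodRequest(250, 256), nodes))                          # -> node-b
print(schedule(PodRequest(250, 256, {"sku=gpu:NoSchedule"}), nodes))  # -> gpu-node
print(schedule(PodRequest(8000, 512), nodes))                         # -> None
```

A pod that no node can accept stays in the Pending state until resources free up or new nodes join, which is what the None result stands for here.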

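The controller manager's control loops all follow the same reconcile pattern: observe the current state, compare it with the desired state, and act to close the gap. The Python sketch below models one ReplicaSet-style loop with hypothetical pod names and no API server involved; in this sketch, scale-in simply deletes the last pods in the list.

```python
def reconcile(desired: int, running: list, next_id: int):
    """One pass of a ReplicaSet-style control loop.

    Compares the observed pods with the desired count and returns
    (new_running, actions, next_id), creating or deleting pods to close the gap."""
    running = list(running)  # work on a copy of the observed state
    actions = []
    while len(running) < desired:  # too few pods: create replacements
        pod = f"pod-{next_id}"
        next_id += 1
        running.append(pod)
        actions.append(("create", pod))
    while len(running) > desired:  # too many pods: delete from the end of the list
        pod = running.pop()
        actions.append(("delete", pod))
    return running, actions, next_id


state, next_id = ["pod-0"], 1
state, actions, next_id = reconcile(3, state, next_id)
print(actions)  # [('create', 'pod-1'), ('create', 'pod-2')]

state.remove("pod-1")  # simulate a pod crashing
state, actions, next_id = reconcile(3, state, next_id)
print(actions)  # [('create', 'pod-3')]

state, actions, next_id = reconcile(1, state, next_id)
print(actions)  # [('delete', 'pod-3'), ('delete', 'pod-2')]

print(reconcile(1, state, next_id)[1])  # [] (already converged, nothing to do)
```

Because each pass only compares states, running it again when nothing has changed produces no actions, which is what lets Kubernetes recover from failures simply by looping.
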
etcd - A distributed, fault-tolerant key-value database that stores data about your cluster's state and configuration.

Cloud Controller Manager - Embeds cloud-specific control logic; for example, it can access the cloud provider's load balancer service. It connects a Kubernetes cluster to a cloud provider's API and decouples the cluster from the components that interact with the cloud platform, so elements inside the cluster don't need to know the implementation details of each cloud provider. The cloud-controller-manager runs only controllers specific to the cloud provider, so it is not required for on-premises Kubernetes environments. It combines multiple, logically independent control loops into a single binary that runs as one process. It helps a cluster scale with nodes running on cloud VMs and take advantage of the cloud provider's high availability and load balancing capabilities to improve resilience and performance.

The core components of the Kubernetes data plane (worker nodes) are:

Nodes - Nodes are physical or virtual machines that can run pods as part of a Kubernetes cluster. Kubernetes supports clusters of up to 5,000 nodes. To scale a cluster's capacity, you add more nodes.

Pods - A pod serves as a single application instance and is the smallest unit in the Kubernetes object model. Each pod consists of one or more tightly coupled containers, plus configuration that governs how those containers run. To run stateful applications, you can connect pods to persistent storage by using Kubernetes Persistent Volumes.

Container Runtime Engine - Each node runs a container runtime, which is responsible for running containers. Kubernetes talks to the runtime through the Container Runtime Interface (CRI); common CRI-compliant runtimes include containerd (used by AKS) and CRI-O. Images built with Docker follow the Open Container Initiative (OCI) image format and run on these runtimes, although Kubernetes removed its built-in Docker Engine integration (dockershim) in version 1.24, and the rkt runtime has been discontinued.

kubelet - Each node runs a kubelet, a small agent that communicates with the Kubernetes control plane. The kubelet ensures that the containers specified in a pod's configuration are running on its node and manages their lifecycle. It executes the actions commanded by the control plane.

kube-proxy - Every node runs kube-proxy, a network proxy that implements Kubernetes Service networking. It maintains network rules on the node so that traffic addressed to a Service, from inside or outside the cluster, is forwarded to the right pods, relying on the operating system's packet filtering layer where available.

Container Networking - Container networking lets containers communicate with hosts and with other containers. It is usually implemented through the Container Network Interface (CNI), a Cloud Native Computing Foundation (CNCF) project used by Kubernetes, Apache Mesos, Cloud Foundry, Red Hat OpenShift, and others. CNI offers a standardized, minimal specification for network connectivity in containers. In older Kubernetes versions, you enabled CNI by passing the kubelet the --network-plugin=cni command-line option, and the kubelet then read configuration files from --cni-conf-dir when setting up networking for each pod. Since Kubernetes 1.24, those kubelet flags have been removed, and the container runtime (for example, containerd or CRI-O) loads the CNI configuration instead.
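
To picture what kube-proxy does for Service traffic, the Python sketch below models a Service as a stable virtual IP in front of a changing set of pod IPs. It is a toy: the real kube-proxy programs iptables or IPVS rules in the kernel rather than proxying in user space, and in iptables mode it picks a backend at random, while IPVS mode defaults to round-robin, which is what this sketch uses. The class name and IP addresses are hypothetical.

```python
import itertools


class ServiceProxy:
    """Toy model of a Kubernetes Service: a stable virtual IP in front of pod IPs."""

    def __init__(self, cluster_ip: str, endpoints: list):
        self.cluster_ip = cluster_ip
        self.set_endpoints(endpoints)

    def set_endpoints(self, endpoints: list) -> None:
        """Replace the backend pod IPs (kube-proxy learns these from the API server)."""
        self.endpoints = list(endpoints)
        self._next = itertools.cycle(self.endpoints) if self.endpoints else None

    def route(self, dest_ip: str) -> str:
        """Return where a packet addressed to dest_ip should actually go."""
        if dest_ip != self.cluster_ip:
            return dest_ip  # not addressed to this Service: leave it alone
        if self._next is None:
            raise ConnectionRefusedError(f"no ready endpoints behind {self.cluster_ip}")
        return next(self._next)  # round-robin across the current endpoints


svc = ServiceProxy("10.0.123.45", ["10.244.1.5", "10.244.2.7"])
print([svc.route("10.0.123.45") for _ in range(3)])
# -> ['10.244.1.5', '10.244.2.7', '10.244.1.5']
svc.set_endpoints(["10.244.3.9"])  # pods were rescheduled; the Service IP stays the same
print(svc.route("10.0.123.45"))    # -> 10.244.3.9
```

When pods are added or removed, kube-proxy receives the updated endpoints from the API server and rewrites its rules, so clients keep using the same Service IP.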

📝What is the Azure Kubernetes Service (AKS)?

With this brief review of containers and Kubernetes done, we can learn a little about Azure AKS.

AKS manages your hosted Kubernetes environment and makes it simple to deploy and manage containerized applications in Azure.

Your AKS environment includes features such as automated updates, self-healing, and easy scaling. Azure manages the control plane of your Kubernetes cluster, and on the Free pricing tier the control plane itself costs nothing. You manage the agent nodes in the cluster and pay for the VMs on which your nodes run; the Standard and Premium tiers add a per-cluster fee for features such as an uptime SLA.

You can create and manage the cluster in the Azure portal or with the Azure CLI.

Azure Resource Manager templates let you automate cluster creation and give access to features such as advanced networking options, Microsoft Entra ID integration, and resource monitoring, so you can set up triggers and events to automate cluster deployment for multiple scenarios.
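
As a hedged how-to, the commands below follow the usual Azure CLI flow for a basic cluster: create a resource group, create the AKS cluster, download its credentials for kubectl, and list the nodes. They are wrapped in Python only to keep the examples in one language; the resource group, cluster name, region, and node count are placeholders, and the script defaults to a dry run that only prints the commands. Running them for real requires the Azure CLI, kubectl, and a signed-in az session, and it creates billable VMs.

```python
import shlex
import subprocess


def aks_quickstart_commands(resource_group: str, cluster: str, location: str,
                            node_count: int = 2) -> list:
    """Azure CLI commands for a basic AKS cluster, as argument lists (not shell strings)."""
    return [
        ["az", "group", "create", "--name", resource_group, "--location", location],
        ["az", "aks", "create", "--resource-group", resource_group, "--name", cluster,
         "--node-count", str(node_count), "--generate-ssh-keys"],
        ["az", "aks", "get-credentials", "--resource-group", resource_group, "--name", cluster],
        ["kubectl", "get", "nodes"],
    ]


def run(commands: list, dry_run: bool = True) -> None:
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises on the first failing command


# Placeholder names; pass dry_run=False only when you really want to create resources.
run(aks_quickstart_commands("myResourceGroup", "myAKSCluster", "eastus"))
```

Passing argument lists instead of shell strings to subprocess.run avoids quoting problems, and check=True stops the sequence at the first failing command.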

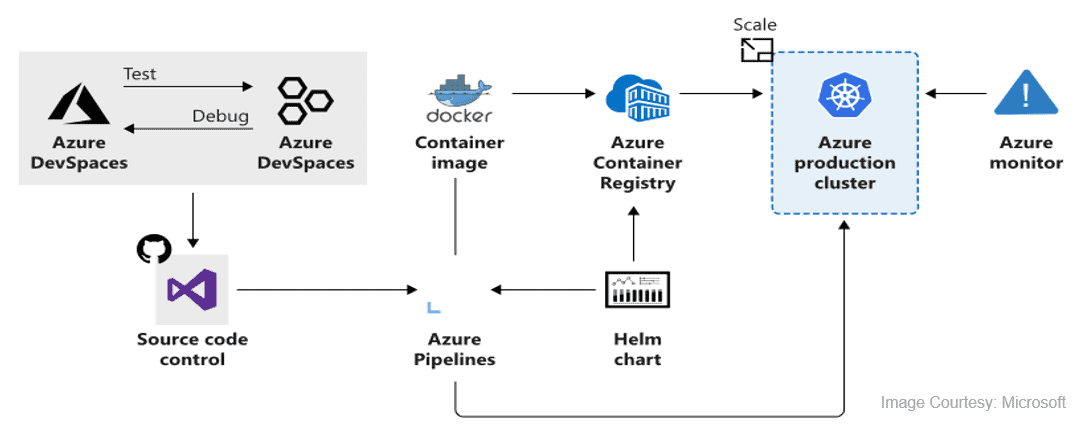

📝Workloads developed and deployed to AKS

AKS supports the Docker image format. With a Docker image, you can use any development environment to create a workload, package the workload as a container, and deploy the container as a Kubernetes pod.

You can use the standard Kubernetes command-line tools or the Azure CLI to manage your deployments. Because AKS supports the standard Kubernetes tools, you don't need to change your current workflow when migrating an existing Kubernetes workload to AKS.
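
To show how a container image becomes running pods, the Python sketch below builds a minimal Kubernetes Deployment manifest and prints it as JSON, which kubectl apply -f accepts just like YAML. The app name, the image reference myregistry.azurecr.io/hello-web:1.0, the replica count, and the port are hypothetical placeholders.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 2, port: int = 80) -> dict:
    """Minimal apps/v1 Deployment running `replicas` copies of one container image."""
    labels = {"app": name}  # the selector below must match the pod template's labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                    }],
                },
            },
        },
    }


# Hypothetical app and image; save the output as deployment.json, then:
#   kubectl apply -f deployment.json
print(json.dumps(deployment_manifest("hello-web", "myregistry.azurecr.io/hello-web:1.0"), indent=2))
```

The selector's matchLabels must match the pod template's labels; that link is how the Deployment finds and manages the pods it creates.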

AKS also supports popular development and management tools such as Helm, Draft, the Kubernetes extension for Visual Studio Code, and Visual Studio Kubernetes Tools.

The Bridge to Kubernetes feature allows you to run and debug code on your development computer while still being connected to your Kubernetes cluster and the rest of your application or services.

With Bridge to Kubernetes, you can:

Avoid having to build and deploy code to your cluster. Instead, you create a direct connection from your development computer to your cluster. That connection allows you to quickly test and develop your service in the context of the full application without creating a Docker or Kubernetes configuration for that purpose.

Redirect traffic between your connected Kubernetes cluster and your development computer. The bridge allows code on your development computer and services running in your Kubernetes cluster to communicate as if they are in the same Kubernetes cluster.

Replicate environment variables and mounted volumes available to pods in your Kubernetes cluster to your development computer. With Bridge to Kubernetes, you can modify your code without having to replicate those dependencies manually.

AKS also allows us to integrate other Azure service offerings and use them as part of an AKS cluster solution.

Congratulations! You have completed this simple introduction to Azure AKS.

Thank you for reading. I hope you understood and learned something helpful from my blog.

Please follow me on Cloud&DevOpsLearn and LinkedIn, franciscojblsouza